7 deadly mistakes to avoid in Google Webmaster Tools Console

Google Webmaster Tools is a free service that allows webmasters to submit their websites and sitemaps to Google, influence how their pages are indexed, configure how the search engine’s bots crawl their sites, get a list of the errors detected on their pages, and access valuable information, reports and statistics about their websites. It is a very powerful console that can help webmasters not only optimize their websites but also control how Google accesses them.

Unfortunately, even though using the basic functionality and changing the default configuration is relatively straightforward, one should be very careful when using the advanced features, because some of the options can heavily affect the website’s SEO campaign and change the way Google evaluates it. In this article we discuss the 7 most important mistakes one can make with the advanced features of Google Webmaster Tools, and we explain how to avoid a disaster by configuring everything properly.

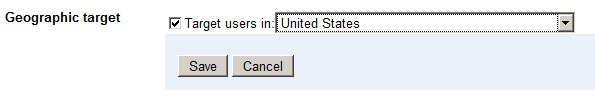

1. Geographic Targeting feature

Geographic Targeting is a great feature that allows you to link your website to a particular location/country. It should be used only when a website targets users from a particular country and it does not really make sense to attract visitors from other countries.

This feature is available if your domain has a generic (neutral) top-level domain such as .com, .net or .org, while it is preconfigured if your domain has a country-specific TLD. By pointing your site to a particular country you can achieve a significant boost in your rankings on the equivalent country version of Google. For example, by setting the Geographic Targeting to France you can positively affect your rankings on Google.fr. Unfortunately, this will also lower your rankings on the other country versions of Google, so you might boost your rankings on Google.fr while actually hurting your results on Google.com and Google.co.uk.

So don’t use this feature unless your website targets only people from a specific country.

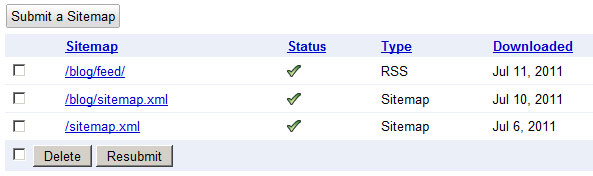

2. Sitemap Submission

Google allows you to submit a list of sitemaps via the console, and this will drastically speed up the indexing of your website. Even though this is a great feature for most webmasters, in a few cases it can cause two major problems.

The first problem is that XML sitemaps sometimes appear in search results, so if you don’t want your competitors to be able to see them, make sure you gzip them and submit the .gz version to Google. Obviously, you also shouldn’t name the files “sitemap.xml” or add their URLs to your robots.txt file.
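The gzip step is easy to script. Below is a minimal Python sketch, assuming you already have a plain list of absolute URLs; it builds a sitemaps.org `<urlset>` document and compresses it. The function names are hypothetical, chosen for illustration only.

```python
import gzip
from xml.sax.saxutils import escape

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return a minimal sitemaps.org <urlset> document listing the given absolute URLs."""
    # escape() turns &, < and > into XML entities, which <loc> values require.
    entries = "\n".join(f"  <url><loc>{escape(u)}</loc></url>" for u in urls)
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        f'<urlset xmlns="{SITEMAP_NS}">\n'
        f"{entries}\n"
        "</urlset>\n"
    )

def gzipped_sitemap(urls):
    """Return the sitemap as gzip-compressed bytes, ready to be saved as a .xml.gz file."""
    return gzip.compress(build_sitemap(urls).encode("utf-8"))
```

You would then save the returned bytes under a non-obvious name (for example a hypothetical `p7x3-urls.xml.gz` rather than `sitemap.xml`), leave it out of robots.txt, and submit that URL in the console.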

The second problem appears when you get a large number of pages (several hundred thousand) indexed very quickly. Even though sitemaps will help you get those pages indexed (provided you have enough PageRank), you are also very likely to hit a limit and raise a spam flag for your website. Don’t forget that the Panda Update particularly targets websites with low-quality content that try to get thousands of pages indexed very quickly.

So if you want to avoid exposing your site architecture, be extra careful with your sitemap files, and if you don’t want to hit any limits, use a reasonable number of sitemaps.

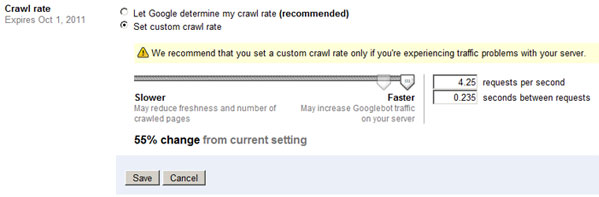

3. Setting the Crawl Rate

Google gives you the ability to set how fast or slow you want Googlebot to crawl your website. This affects not only the crawl rate but also the number of pages that get indexed every day. If you decide to change the default setting and set a custom crawl rate, remember that a very high crawl rate can consume all of your server’s bandwidth and put a significant load on it, while a very low crawl rate will reduce the freshness and the number of crawled pages.
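To get a feel for this trade-off, the arithmetic can be sketched in a few lines of Python. This assumes a hypothetical steady rate expressed in requests per second and an assumed average page size of 100 KiB; real Googlebot traffic is not this uniform, so treat the results as rough estimates only.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

def daily_crawl_load(requests_per_second, avg_page_kib):
    """Estimate fetches per day and data transferred (GiB per day) for a steady crawl rate."""
    fetches_per_day = requests_per_second * SECONDS_PER_DAY
    gib_per_day = fetches_per_day * avg_page_kib / (1024 * 1024)  # KiB -> GiB
    return fetches_per_day, gib_per_day

# Hypothetical rates and page size, purely for comparison.
for rate in (0.1, 1.0, 5.0):
    fetches, gib = daily_crawl_load(rate, avg_page_kib=100)
    print(f"{rate:>4} req/s -> {fetches:>9,.0f} fetches/day, {gib:5.1f} GiB/day")
```

At 1 request per second, for example, this comes to 86,400 fetches a day, or roughly 8.2 GiB of transfer at 100 KiB per page; multiply the rate by five and the load on your server grows by the same factor.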

For most webmasters, the recommended option is to let Google determine the crawl rate.

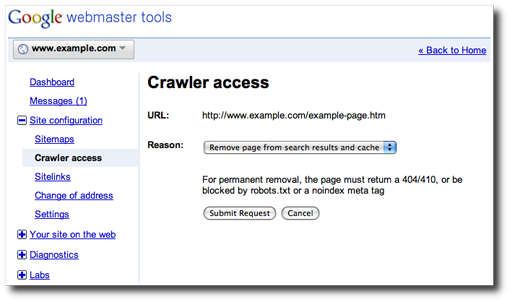

4. Crawler Access

On the Crawler Access tab, Google lets you see which parts of your website it is allowed to crawl. It also lets you generate a robots.txt file and request URL removals. These features are powerful tools in the hands of experienced users; nevertheless, a misconfiguration can lead to catastrophic results, heavily affecting the website’s SEO campaign. Thus it is highly recommended not to block any part of your website unless you have a very good reason for doing so and you know exactly what you are doing.

Our suggestion is that most webmasters should not block Google from any directory and should let it crawl all of their pages.
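Before uploading a robots.txt file, you can check which of your important URLs it would block. The sketch below uses Python’s standard `urllib.robotparser` module; the draft rules, the `blocked_urls` helper and the URLs are hypothetical. Note that this parser applies simple prefix matching and may not reproduce every rule Googlebot supports (for example `*` and `$` wildcards), so treat it as a first check alongside the console’s own tools.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft robots.txt: blocks one directory, allows everything else.
DRAFT_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the subset of `urls` that the given robots.txt would stop `user_agent` from fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]

# Hypothetical list of pages you definitely want crawled (plus one you don't).
important_pages = [
    "https://www.example.com/",
    "https://www.example.com/products/widget",
    "https://www.example.com/private/report",
]
print(blocked_urls(DRAFT_ROBOTS_TXT, important_pages))
```

Here only the `/private/` URL is reported. If a page you care about ever shows up in that output, the draft rules block more than you intended.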

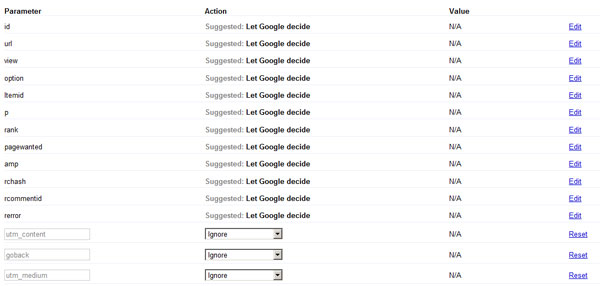

5. Parameter handling

After crawling both your website and the external links that point to your pages, Google shows you the most popular URL parameters that are passed to your pages. In the Parameter Handling console, Google allows you to select whether a particular parameter should be “Ignored”, “Not Ignored” or “Let Google decide”.

These GET parameters range from product IDs to session IDs and from Google Analytics campaign parameters to page navigation variables. In many cases these parameters can lead to duplicate content issues (for example session IDs or Google Analytics campaign parameters), while in other cases they are essential parts of your website (product or category IDs). A misconfiguration in this console could have disastrous results for your SEO campaign, because you can either make Google index several duplicate pages or make it drop useful pages of your website from the index.

This is why it is highly recommended to let Google decide how to handle each parameter, unless you are absolutely sure what a particular parameter does and whether it should be removed or kept. Last but not least, remember that this console is not the right way to deal with duplicate content. It is highly recommended to work on your internal link structure and use 301 redirects and canonical URLs before trying to ignore or block particular parameters.
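To illustrate the canonical-URL approach, here is a minimal Python sketch that removes parameters which only create duplicate URLs and keeps everything else. The Google Analytics `utm_*` campaign parameters are real; `sessionid` is a hypothetical session-ID name, and the whole list is an assumption you would need to adapt to your own site.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Parameters assumed to create duplicates only. "sessionid" is a hypothetical name;
# the utm_* entries are the Google Analytics campaign parameters.
IGNORED_PARAMS = {"sessionid", "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content"}

def canonical_url(url):
    """Drop duplicate-creating parameters (and the fragment), keeping parameters that select content."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k.lower() not in IGNORED_PARAMS]
    # urlencode() may re-encode values (e.g. a space becomes "+").
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

def canonical_link_tag(url):
    """Build the <link rel="canonical"> element for the page served at `url`."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'
```

For example, `canonical_link_tag("https://www.example.com/product?id=42&utm_source=news")` returns `<link rel="canonical" href="https://www.example.com/product?id=42">`, which could go in the page’s `<head>`. The sketch uses a deny-list rather than an allow-list on purpose: any parameter you have not explicitly classified is kept, which matches the advice above not to drop parameters you are unsure about.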

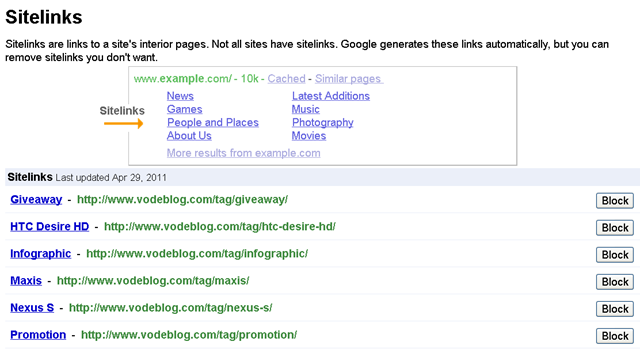

6. Sitelinks

As we have explained several times in the past, sitelinks are links that appear under some results in the SERPs to help users navigate those sites more easily. Sitelinks are known to attract user attention, and they are calculated algorithmically based on the website’s link structure and the anchor texts used. Through the Google Webmaster Tools console, the webmaster is able to block sitelinks that are not relevant, targeted or useful to users. Failing to remove irrelevant sitelinks, or removing the wrong ones, can lead to a low CTR in search engine results and thus affect the SEO campaign.

Regularly revisiting the console and providing appropriate feedback about each unwanted sitelink can help you get better and more targeted sitelinks in the future.

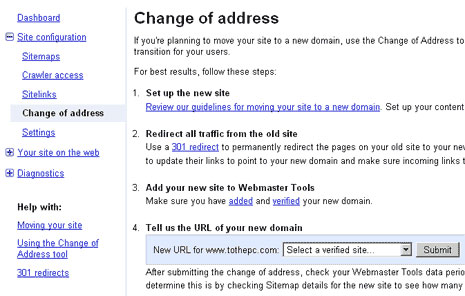

7. Change of Address

The Change of Address option is available only for domains, not for subdomains or subdirectories. From this console you can transfer your website from one domain to a new one. Most users will never need this feature, but if you find yourself in this situation you need to proceed with caution. Using 301 redirects and mapping the old pages to the new ones are extremely important for a successful transition. A misconfiguration can cause a lot of problems; nevertheless, it is highly unlikely that someone will set up this option accidentally.
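The 301 mapping itself can be sketched as a tiny WSGI application in Python, assuming the old domain is served by a process you control. The host name, the `PATH_MAP` entries and the function names are hypothetical; any path not listed in the map is assumed to exist unchanged on the new domain.

```python
from urllib.parse import urlsplit, urlunsplit
from wsgiref.util import request_uri

NEW_HOST = "www.new-example.com"  # hypothetical new domain

# Hypothetical one-to-one map for pages whose paths changed during the move.
PATH_MAP = {"/about-us.html": "/about/"}

def new_location(old_url):
    """Map a URL on the old domain to its equivalent page on the new domain."""
    parts = urlsplit(old_url)
    path = PATH_MAP.get(parts.path, parts.path)  # unlisted paths map to themselves
    return urlunsplit((parts.scheme, NEW_HOST, path, parts.query, ""))

def redirect_app(environ, start_response):
    """WSGI app for the old domain: answer every request with a 301 to the mapped new URL."""
    old_url = request_uri(environ, include_query=True)  # rebuilds scheme, host, path and query
    start_response("301 Moved Permanently", [("Location", new_location(old_url))])
    return [b""]
```

For a quick local test, `wsgiref.simple_server.make_server("", 8000, redirect_app).serve_forever()` serves it; the same page-by-page mapping could equally be expressed in your web server’s own redirect rules.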

Avoid using this feature when your old domain has been banned by Google, and always keep in mind that changing your domain will have a huge impact on your SEO campaign.

Google Webmaster Tools is a great service because it provides webmasters with all the resources they need to optimize, monitor and control their websites. Nevertheless, caution is required when changing the standard configuration, because mistakes can become very costly.
