Google’s AJAX crawling scheme and its effects on SEO

Almost 2 years ago, Google proposed a new way of making AJAX applications and websites crawlable. As most of you know, search engines are very good at indexing and analyzing HTML documents, but they are not particularly good at understanding JavaScript and thus at crawling AJAX content. Even though 2 years have passed, only a small number of websites have properly implemented Google’s suggestion. Moreover, despite the fact that Google has started to crawl, index and present such AJAX pages on SERPs, other search engines like Bing do not support this new “method”.

In this article I briefly explain what AJAX is, discuss the problem of crawling AJAX content, review the various solutions that have been proposed, go over the common mistakes that web developers make when using technologies that are not search engine friendly, like AJAX and Flash, and finally explain whether or not you should use Google’s proposal. Even though this topic is a bit advanced and requires basic knowledge of web development techniques and of web technologies in general, I try to focus less on the programming part and more on the SEO part. If you have any questions, feel free to post them in the comments below and we’ll try to cover them in an upcoming blog post.

What is AJAX?

AJAX is an acronym for Asynchronous JavaScript And XML. It is a set of web development techniques that allow software engineers to create interactive web applications. By using JavaScript, the browser interacts with the user and sends web requests to the server, which replies in XML (or in JSON or HTML). AJAX is usually used to update specific parts of the HTML page without causing a redirect or a page refresh. AJAX methods also allow you to create fast web applications that significantly reduce the loading time and provide a better user experience. Explaining more about AJAX is beyond the scope of this article; nevertheless, if you are a developer and you want to learn more, I strongly recommend reading the AJAX tutorial by W3Schools.

The Problem of Crawling AJAX

The main problem of crawling AJAX is that it relies heavily on JavaScript, a client-side scripting language that runs in the browser (Internet Explorer, Mozilla Firefox, Opera, Chrome etc). Moreover, different browsers support different features and functions (even though this has started to change over the years). Last but not least, executing JavaScript requires additional resources, and this increases the costs for the search engines. As we discussed in the past, when a particular method increases the operating costs of the search engines, they give webmasters incentives to avoid such techniques or they work around the problem by proposing less costly approaches.

Even though Google has said that they have taken steps to better understand JavaScript, Flash and HTML forms, it is still not recommended to rely on these technologies since they are not search engine friendly. Also, as you will see below, the solutions that have been proposed for crawling AJAX do not rely on executing JavaScript (which would increase the costs for search engines); instead they make webmasters change their website architecture to make it SEO friendly.

Solutions for Crawling AJAX

The 2 most popular techniques that have been proposed over the years are the Hijax Approach and the AJAX crawling scheme of Google.

The Hijax technique

According to the Hijax technique, when you have a link that executes AJAX or JavaScript, you should not code it like this:

Neither like this:

Both of the above approaches are very popular among web developers, but unfortunately they do not provide a meaningful URL that can be used by the search engines. By using the Hijax technique the above link should be rewritten as follows:

The above code will take the search engine to the target page when JavaScript is turned off, and at the same time it will fire the AJAX code when JavaScript is turned on (obviously the someFunction method should handle the click and load the AJAX content for the user). As a result, both the users and the search engines will be able to access the content of the linked page.
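
As a rough sketch of the link styles discussed above: the markup in the comments, the page name `page.html` and the `loadContent` callback are assumptions for illustration, not a definitive implementation. The idea is that the Hijax link keeps a real URL in `href` and lets a click handler take over only when JavaScript is available:

```javascript
// Link styles to avoid, because they expose no crawlable URL (assumed examples):
//   <a href="javascript:someFunction('page')">Page</a>
//   <a href="#" onclick="someFunction('page')">Page</a>
//
// Hijax style, with a real URL plus a handler (assumed example):
//   <a href="page.html" onclick="return someFunction(this.href, loadContent)">Page</a>

// Hypothetical handler. `loadContent` is an assumed callback that fetches
// the given URL via AJAX and updates the page.
function someFunction(href, loadContent) {
  try {
    loadContent(href);
    // Returning false from an inline onclick cancels the normal navigation.
    return false;
  } catch (err) {
    // If the AJAX path fails, let the browser follow the link as usual.
    return true;
  }
}
```

With JavaScript turned off the `onclick` attribute is simply ignored, so both crawlers and users without scripting land on `page.html`.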

Of course the above technique has several limitations since it does not cover cases where the AJAX content is created dynamically based on the input of the user.

The AJAX crawling scheme of Google

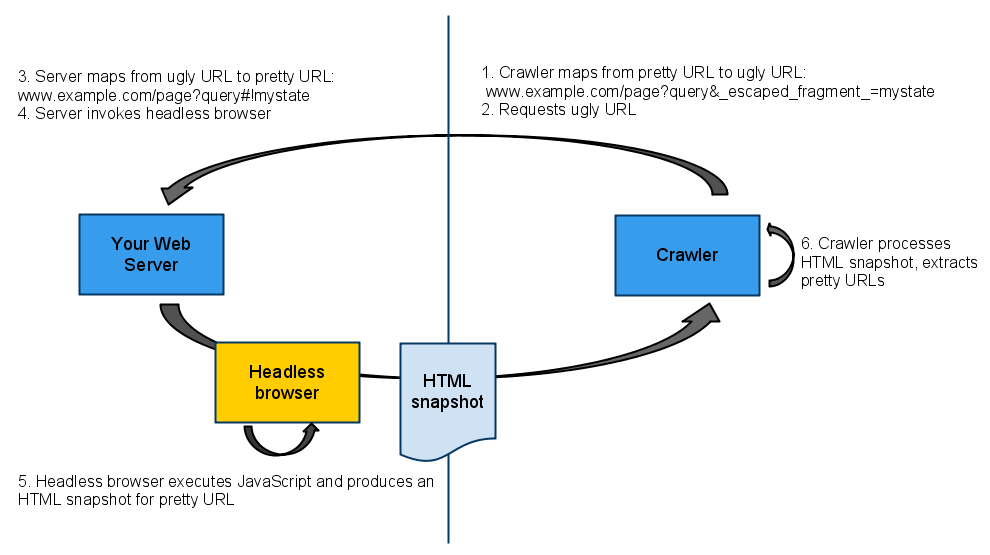

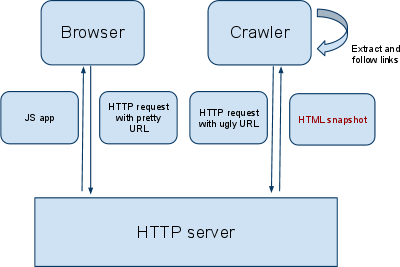

Google’s AJAX crawling scheme proposes marking the addresses of all the pages that load AJAX content with specific characters. The whole idea is to use special hash fragments (#!) in the URLs of those pages to indicate that they load AJAX content. When Google finds a link that points to an “AJAX” URL, for example “http://example.com/page?query#!state”, it automatically interprets (escapes) it as “http://example.com/page?query&_escaped_fragment_=state”.
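
The mapping from the #! URL to the escaped URL can be sketched roughly as follows. The function names are hypothetical, and the set of characters that gets percent-escaped is my reading of the scheme, so verify it against Google’s documentation before relying on it:

```javascript
// Percent-escape the characters that would be ambiguous inside a query
// string: control characters and space, "#", "%", "&", "+" and non-ASCII.
// (Assumed character set; "/" and "=" are left readable.)
function escapeFragment(fragment) {
  return fragment.replace(
    /[\u0000-\u0020#%&+\u007f-\u{10ffff}]/gu,
    (ch) => encodeURIComponent(ch)
  );
}

// Convert an "AJAX" URL (with #!) into the URL the crawler requests.
function toEscapedUrl(ajaxUrl) {
  const idx = ajaxUrl.indexOf("#!");
  if (idx === -1) return ajaxUrl; // not an AJAX URL under this scheme

  const base = ajaxUrl.slice(0, idx);
  const state = ajaxUrl.slice(idx + 2);
  // Append to an existing query string with "&", otherwise start one with "?".
  const separator = base.includes("?") ? "&" : "?";
  return base + separator + "_escaped_fragment_=" + escapeFragment(state);
}

console.log(toEscapedUrl("http://example.com/page?query#!state"));
```

The last line uses the example URL from the paragraph above.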

The programmer is forced to change the website architecture in order to handle these requests. So when Google sends a web request for the escaped URL, the server must return the same HTML code as the one presented to the user when the AJAX function is called.
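
On the server side, the handling could look something like the sketch below. The content store, the page paths and the `handleRequest` name are all assumptions for illustration; a real site would plug the same logic into its own framework (PHP, JSP, ASP.NET and so on):

```javascript
// Hypothetical content store, shared by the AJAX endpoint and the crawler
// snapshot so that both return the same HTML.
const pages = new Map([
  ["/blog/first-post", "<h1>First post</h1><p>Hello</p>"],
]);

// Decide what to return for an incoming request URL.
function handleRequest(requestUrl) {
  // The base URL is only needed to parse relative request paths.
  const url = new URL(requestUrl, "http://example.com");
  const state = url.searchParams.get("_escaped_fragment_");

  if (state === null) {
    // Normal visitor: return the holding page that loads content via AJAX.
    return { status: 200, body: '<div id="content"></div><script src="/app.js"></script>' };
  }

  // Crawler request: return the HTML snapshot for the requested state.
  const html = pages.get(state);
  if (html === undefined) return { status: 404, body: "Not found" };
  return { status: 200, body: html };
}
```

Serving the snapshot from the same store that feeds the AJAX responses is one way to keep the two versions identical.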

After Google sees the “AJAX URL” and interprets (escapes) it, it grabs the content of the page and indexes it. Finally, when the indexed page is presented in the search results, Google shows the original AJAX URL to the user instead of the “escaped” one. As a result, the programmer must handle the user’s request for the #! URL and present the appropriate content when the page loads.
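
Handling the user’s request on page load might look like this minimal sketch. The function names and the `loadContent` callback are hypothetical:

```javascript
// Extract the application state from a location hash such as "#!/blog/21".
// Returns null when the hash does not use the #! convention.
function stateFromHash(hash) {
  return hash.startsWith("#!") ? hash.slice(2) : null;
}

// Load the content for the state found in the hash, if any.
// `loadContent` is an assumed callback that fetches and displays the content.
function restoreState(hash, loadContent) {
  const state = stateFromHash(hash);
  if (state === null) return false;
  loadContent(state);
  return true;
}

// In the browser this would be wired up roughly as:
//   window.addEventListener("load", () => restoreState(window.location.hash, loadContent));
```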

So as you probably understand Google proposed a way to make the AJAX content crawlable without executing JavaScript (those PhDs are clever, aren’t they?). This technique is more generic than Hijax as it covers more cases, but it is much more complicated, it requires additional coding and it is currently supported only by Google.

Common Web Development mistakes

As we saw above, one of the most common mistakes that web developers make is that they don’t provide in the JavaScript links a meaningful URL for the search engines. Another thing that many web developers ignore is that according to the web specifications & protocols every URL parameter contained after the “#” symbol (hash fragments) is NEVER sent to the web server. So the following URLs create exactly the same web requests to the server:

http://example.com/#param1=1&param2=2

http://example.com/#/directory/page.html

http://example.com/#/directory/page.html?param=1

All the above links will generate a web request to the URL http://example.com/ and all the extra parameters after # will be completely ignored. That is why search engines ignore everything after # (we’ll talk about “#!” a bit later, but yes, it also creates the same web request on the server as the previous URLs).
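
You can check this with the standard `URL` class that is available in browsers and Node.js. The helper name `requestTarget` is my own; it simply returns the part of the address that is actually sent to the server:

```javascript
// Return the path and query string that a browser sends to the server.
// The fragment (everything from "#") stays on the client.
function requestTarget(address) {
  const url = new URL(address);
  return url.pathname + url.search;
}

const addresses = [
  "http://example.com/#param1=1&param2=2",
  "http://example.com/#/directory/page.html",
  "http://example.com/#/directory/page.html?param=1",
  "http://example.com/#!/directory/page.html",
];

for (const address of addresses) {
  console.log(requestTarget(address)); // every address yields "/"
}
```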

SO! Based on the above, we conclude that most of the AJAX or Flash techniques that promise SEO friendly URLs by using hash fragments do NOT work. Some of them even bother to change the title and the text of the holding page by using JavaScript in order to make the websites more SEO friendly. Don’t waste your time on those techniques because they don’t work. If you rely on JavaScript code to make your website SEO friendly you are going the wrong way!

The only exception to the above rule is when #! is used. So you might be thinking that if you use #! instead of # you will be ok. Unfortunately the answer is NO! Just by using it you will gain nothing. You must also write code in PHP, JSP, ASP or ASP.NET to ensure that your server handles Google’s AJAX crawling scheme and presents the appropriate holding page (as we explained above).

Should you use Google’s proposal? Let’s focus on a case study.

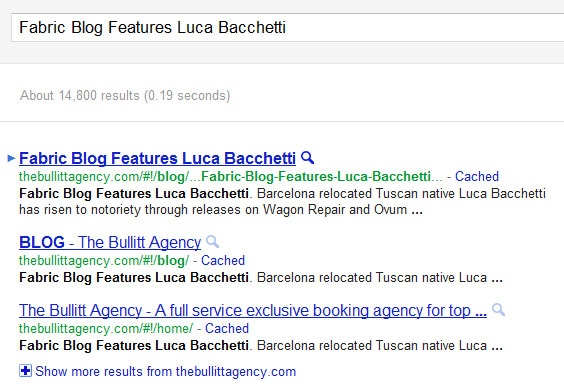

Currently Google’s AJAX crawling scheme has been implemented by a relatively small number of websites, and many of them have not done it properly. One of the websites that has done a pretty good job is thebullittagency.com (note that it is not related to our company).

First of all let’s run the query “Fabric Blog Features Luca Bacchetti” on Google. The URL of the first result is the following:

http://thebullittagency.com/#!/blog/21-Fabric-Blog-Features-Luca-Bacchetti-

Note that the title and the snippet on SERPs are unique and come from the particular blog post. Now let’s see what the escaped URL looks like:

http://thebullittagency.com/?&_escaped_fragment_=/blog/21-Fabric-Blog-Features-Luca-Bacchetti-

As required by the AJAX crawling scheme, they return more or less the same HTML content as the one that they load with AJAX. Note that if you want to be 100% safe you should present exactly the same code to avoid automated bans for cloaking.

Now let’s see how Google handles the PageRank for the AJAX URLs. Let’s check the PageRank values by using our PageRank Check tool. (Note that all of our tools handle the AJAX crawling scheme). Here are the results:

http://thebullittagency.com/#!/blog/ – PageRank: 3

http://thebullittagency.com/?&_escaped_fragment_=/blog/ – PageRank: 3

http://thebullittagency.com/ – PageRank: 4

So Google does handle the links and the PageRank values of the AJAX URLs separately, since the #! URL and its escaped version don’t have the same PR value as the homepage. Also, the escaped URL has exactly the same value as the original #! URL. This is what we should expect after all, since Google makes it clear that they treat those 2 URLs as the same. This is actually good news because it means that if someone decides to link to the blog post, all the link juice and the anchor text info will pass to the actual article and not to the homepage.

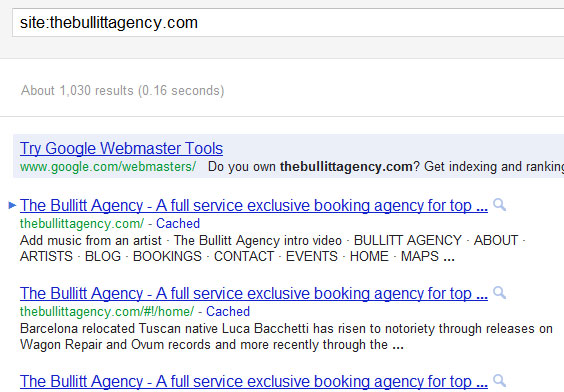

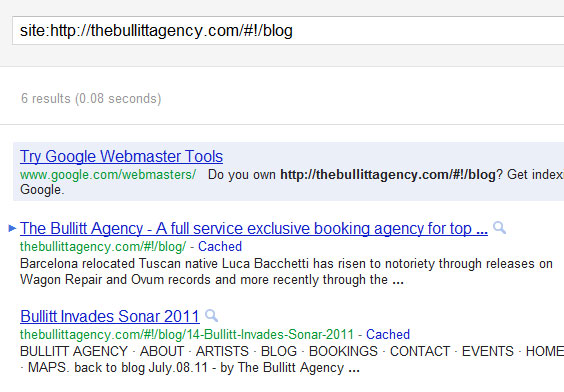

Now let’s see how many pages are indexed. If we search Google for the query “site:thebullittagency.com”, we get more than 1 thousand results, which means that the website is indexed normally. Also, if we try the query “site:http://thebullittagency.com/#!/blog” we get all the articles that have been written on their blog. So Google’s AJAX crawling scheme is safe to use, right? Nope!

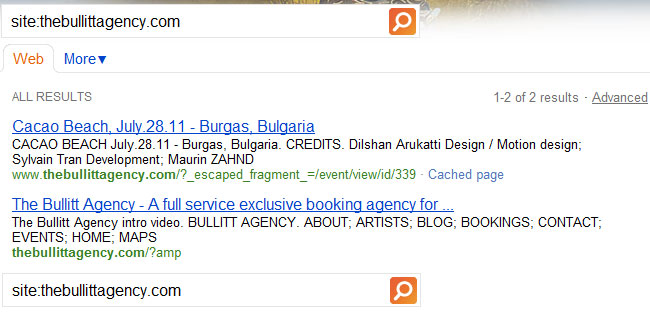

Let’s run the query “site:thebullittagency.com” on Bing. Well, things don’t look that good there. There are only 2 results: the homepage and an ugly escaped URL that was probably either submitted manually or linked directly from an external source. So Bing does not handle those URLs at all; it ignores everything after #!, and when a link points to an internal page it passes all the juice to the homepage.

But if this is true, then why is twitter.com indexed properly on Bing? The answer is that Twitter does use the #! in their URLs, but when a search engine requests the “http://twitter.com/username” version of the page they provide the HTML normally. Of course, if a user tries to access this version they do a sneaky JavaScript redirect to the #! version by using the following code: “window.location.replace(`/#!/Username`);”. Why does Twitter use this approach? Because by using AJAX they have fewer page refreshes, they improve their loading time and they reduce their operating costs (fewer servers, more available bandwidth etc).

The above technique is not a generic method that will help you get AJAX content indexed, and it is extremely dangerous since it violates Google’s policy on JavaScript redirects. It might be ok if you are Twitter (which had its Toolbar PageRank value dropped a few months ago), but it is definitely not ok if you are a simple webmaster.

Conclusions

For me as a programmer, the AJAX crawling problem is far from resolved. Google has proposed a clever solution for crawling AJAX content that is low cost for them; nevertheless, this approach is complicated and really costly for developers. That is why, 2 years after the proposal of the AJAX crawling scheme, only a very small number of websites have actually implemented it properly. Moreover, we should note that currently only Google supports this scheme, and by using it you risk losing the traffic that you receive from the other search engines.

When should you use it? Perhaps you can use Google’s method when you have no other choice. Personally I believe that you always have the choice of not using AJAX technology on pages that are important for Search Engines. If I had to use AJAX, I would go for the Hijax technique that is easier, safer and supported by all search engines.

If you feel confused by all this, I strongly recommend that you stay away from AJAX and do not use it on your money-making landing pages. If you have questions or suggestions, feel free to leave a comment below. Last but not least, don’t forget to share this article if you found it useful. Sharing is caring! 🙂
